May 25, 2023

Here’s Why AI May Be Extremely Dangerous—Whether It’s Conscious or Not

Artificial intelligence algorithms will soon reach a point of rapid self-improvement that threatens our ability to control them and poses great potential risk to humanity

By Tamlyn Hunt


“The idea that this stuff could actually get smarter than people.... I thought it was way off…. Obviously, I no longer think that,” Geoffrey Hinton, one of Google's top artificial intelligence scientists, also known as “the godfather of AI,” said after he quit his job in April so that he could warn about the dangers of this technology.

He’s not the only one worried. A 2023 survey of AI experts found that 36 percent fear that AI development may result in a “nuclear-level catastrophe.” Almost 28,000 people have signed on to an open letter written by the Future of Life Institute, including Steve Wozniak, Elon Musk, the CEOs of several AI companies and many other prominent technologists, asking for a six-month pause or a moratorium on new advanced AI development.

As a researcher in consciousness, I share these strong concerns about the rapid development of AI, and I am a co-signer of the Future of Life open letter.


Why are we all so concerned? In short: AI development is going way too fast.

The key issue is the profoundly rapid improvement in the conversational abilities of the new crop of advanced “chatbots,” or what are technically called “large language models” (LLMs). With this coming “AI explosion,” we will probably have just one chance to get this right.

If we get it wrong, we may not live to tell the tale. This is not hyperbole.

This rapid acceleration promises to soon result in “artificial general intelligence” (AGI), and when that happens, AI will be able to improve itself with no human intervention. It will do this in the same way that, for example, Google’s AlphaZero AI learned how to play chess better than even the very best human or other AI chess players in just nine hours from when it was first turned on. It achieved this feat by playing itself millions of times over.

A team of Microsoft researchers led by Sébastien Bubeck analyzed OpenAI’s GPT-4, which I think is the best of the new advanced chatbots currently available, and said in a new preprint paper that it showed “sparks of artificial general intelligence.”

In testing, GPT-4 performed better than 90 percent of human test takers on the Uniform Bar Exam, a standardized test used to certify lawyers for practice in many states. That figure was up from just 10 percent for the previous version, GPT-3.5, which was trained on a smaller data set. Similar improvements appeared in dozens of other standardized tests.

Most of these tests are tests of reasoning. This is the main reason why Bubeck and his team concluded that GPT-4 “could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

This pace of change is why Hinton told the New York Times: “Look at how it was five years ago and how it is now. Take the difference and propagate it forwards. That’s scary.” In a mid-May Senate hearing on the potential of AI, Sam Altman, the head of OpenAI, called regulation “crucial.”

Once AI can improve itself, which may be not more than a few years away, and could in fact already be here now, we have no way of knowing what the AI will do or how we can control it. This is because superintelligent AI (which by definition can surpass humans in a broad range of activities) will—and this is what I worry about the most—be able to run circles around programmers and any other human by manipulating humans to do its will; it will also have the capacity to act in the virtual world through its electronic connections, and to act in the physical world through robot bodies.

This is known as the “control problem” or the “alignment problem” (see philosopher Nick Bostrom’s book Superintelligence for a good overview) and has been studied and argued about by philosophers and scientists, such as Bostrom, Seth Baum and Eliezer Yudkowsky, for decades now.

I think of it this way: Why would we expect a newborn baby to beat a grandmaster in chess? We wouldn’t. Similarly, why would we expect to be able to control superintelligent AI systems? (No, we won’t be able to simply hit the off switch, because superintelligent AI will have thought of every possible way that we might do that and taken actions to prevent being shut off.)

Here’s another way of looking at it: a superintelligent AI will be able to do in about one second what it would take a team of 100 human software engineers a year or more to complete. Or pick any task, like designing a new advanced airplane or weapon system, and superintelligent AI could do this in about a second.

Once AI systems are built into robots, they will be able to act in the real world, rather than only the virtual (electronic) world, with the same degree of superintelligence, and will of course be able to replicate and improve themselves at a superhuman pace.

Any defenses or protections we attempt to build into these AI “gods,” on their way toward godhood, will be anticipated and neutralized with ease by the AI once it reaches superintelligence status. This is what it means to be superintelligent.

We won’t be able to control them because anything we think of, they will have already thought of, a million times faster than us. Any defenses we’ve built in will be undone, like Gulliver throwing off the tiny strands the Lilliputians used to try and restrain him.

Some argue that these LLMs are just automation machines with zero consciousness, the implication being that if they’re not conscious they have less chance of breaking free from their programming. Even if these language models, now or in the future, aren’t at all conscious, this doesn’t matter. For the record, I agree that it’s unlikely that they have any actual consciousness at this juncture—though I remain open to new facts as they come in.

Regardless, a nuclear bomb can kill millions without any consciousness whatsoever. In the same way, AI could kill millions with zero consciousness, in myriad ways, potentially including the use of nuclear bombs, either directly (much less likely) or through manipulated human intermediaries (more likely).

So, the debates about consciousness and AI really don’t figure very much into the debates about AI safety.

Yes, language models based on GPT-4 and many other models are already circulating widely. But the moratorium being called for is to stop development of any new models more powerful than GPT-4, and this can be enforced, with force if required. Training these more powerful models requires massive server farms and energy. They can be shut down.

My ethical compass tells me that it is very unwise to create these systems when we know already we won’t be able to control them, even in the relatively near future. Discernment is knowing when to pull back from the edge. Now is that time.

We should not open Pandora’s box any more than it already has been opened.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.

SQ10. What are the most pressing dangers of AI?


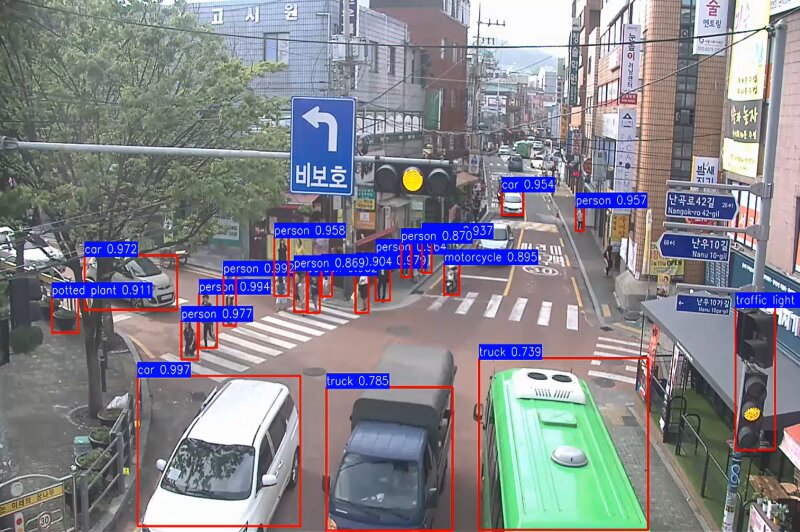

As AI systems prove to be increasingly beneficial in real-world applications, they have broadened their reach, causing risks of misuse, overuse, and explicit abuse to proliferate. As AI systems increase in capability and as they are integrated more fully into societal infrastructure, the implications of losing meaningful control over them become more concerning.[1] New research efforts are aimed at re-conceptualizing the foundations of the field to make AI systems less reliant on explicit, and easily misspecified, objectives.[2] A particularly visible danger is that AI can make it easier to build machines that can spy and even kill at scale. But there are many other important and subtler dangers at present.

In this section

Techno-solutionism, dangers of adopting a statistical perspective on justice, disinformation and threat to democracy, discrimination and risk in the medical setting.

One of the most pressing dangers of AI is techno-solutionism, the view that AI can be seen as a panacea when it is merely a tool.[3] As we see more AI advances, the temptation to apply AI decision-making to all societal problems increases. But technology often creates larger problems in the process of solving smaller ones. For example, systems that streamline and automate the application of social services can quickly become rigid and deny access to migrants or others who fall between the cracks.[4]

When given the choice between algorithms and humans, some believe algorithms will always be the less-biased choice. Yet, in 2018, Amazon found it necessary to discard a proprietary recruiting tool because the historical data it was trained on resulted in a system that was systematically biased against women.[5] Automated decision-making can often serve to replicate, exacerbate, and even magnify the same bias we wish it would remedy.

Indeed, far from being a cure-all, technology can actually create feedback loops that worsen discrimination. Recommendation algorithms, like Google’s PageRank, are trained to identify and prioritize the most “relevant” items based on how other users engage with them. As biased users feed the algorithm biased information, it responds with more bias, which informs users’ understandings and deepens their bias, and so on.[6] Because all technology is the product of a biased system,[7] techno-solutionism’s flaws run deep:[8] a creation is limited by the limitations of its creator.
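The feedback loop described above can be illustrated with a toy simulation. This is only a sketch under simplifying assumptions: two items compete for exposure, users click the first item slightly more often when both are shown equally, and the next round's exposure simply follows the click share. The function name and the `user_bias` parameter are hypothetical and do not describe how any real ranking system works.

```python
def simulate_feedback_loop(initial_share: float, user_bias: float, rounds: int) -> list[float]:
    """Track item A's share of exposure across repeated ranking rounds.

    initial_share: fraction of exposure item A starts with (between 0 and 1).
    user_bias: how much more often users click A than B at equal exposure
               (1.0 means no bias). Hypothetical knob for illustration.
    """
    share = initial_share
    history = [share]
    for _ in range(rounds):
        clicks_a = share * user_bias      # clicks scale with exposure and bias
        clicks_b = 1.0 - share            # item B is clicked at the baseline rate
        share = clicks_a / (clicks_a + clicks_b)  # next exposure follows clicks
        history.append(share)
    return history


if __name__ == "__main__":
    for round_number, share in enumerate(simulate_feedback_loop(0.5, 1.2, 10)):
        print(f"round {round_number}: item A exposure = {share:.3f}")
```

In this toy model a 20 percent click preference, starting from an even split, compounds every round, so item A's exposure keeps growing even though the users' preference never changes.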

Automated decision-making may produce skewed results that replicate and amplify existing biases. A potential danger, then, is when the public accepts AI-derived conclusions as certainties. This determinist approach to AI decision-making can have dire implications in both criminal and healthcare settings. AI-driven approaches like PredPol, software originally developed by the Los Angeles Police Department and UCLA that purports to help protect one in 33 US citizens,[9] predict when, where, and how crime will occur. A 2016 case study of a US city noted that the approach disproportionately projected crimes in areas with higher populations of non-white and low-income residents.[10] When data sets disproportionately represent the less powerful members of society, flagrant discrimination is a likely result.

Sentencing decisions are increasingly decided by proprietary algorithms that attempt to assess whether a defendant will commit future crimes, leading to concerns that justice is being outsourced to software.[11] As AI becomes increasingly capable of analyzing more and more factors that may correlate with a defendant's perceived risk, courts and society at large may mistake an algorithmic probability for fact. This dangerous reality means that an algorithmic estimate of an individual’s risk to society may be interpreted by others as a near certainty—a misleading outcome even the original tool designers warned against. Even though a statistically driven AI system could be built to report a degree of credence along with every prediction,[12] there’s no guarantee that the people using these predictions will make intelligent use of them. Taking probability for certainty means that the past will always dictate the future.
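A short worked example may make the gap between probability and certainty concrete. It is a sketch that assumes the risk scores are perfectly calibrated probabilities, which real tools may not achieve; the function name, the threshold, and the scores are hypothetical.

```python
def expected_false_alarms(risk_scores: list[float], threshold: float = 0.5) -> tuple[int, float]:
    """Count people flagged as high risk and the expected number flagged wrongly.

    Assumes each score is a calibrated probability of reoffending, so a flagged
    person with score p is expected to be a false alarm with probability 1 - p.
    """
    flagged = [p for p in risk_scores if p >= threshold]
    wrongly_flagged = sum(1.0 - p for p in flagged)
    return len(flagged), wrongly_flagged


if __name__ == "__main__":
    # Hypothetical cohort: ten people scored 0.6 and five people scored 0.2.
    scores = [0.6] * 10 + [0.2] * 5
    count, wrong = expected_false_alarms(scores)
    print(f"{count} people flagged, about {wrong:.1f} of them expected not to reoffend")
```

Under these assumptions, treating a 60 percent estimate as a certainty means that roughly four of every ten people flagged would be judged wrongly.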

There is an aura of neutrality and impartiality associated with AI decision-making in some corners of the public consciousness, resulting in systems being accepted as objective even though they may be the result of biased historical decisions or even blatant discrimination. All data insights rely on some measure of interpretation. As a concrete example, an audit of a resume-screening tool found that the two main factors it associated most strongly with positive future job performance were whether the applicant was named Jared, and whether he played high school lacrosse.[13] Undesirable biases can be hidden behind both the opaque nature of the technology used and the use of proxies, nominally innocent attributes that enable a decision that is fundamentally biased. An algorithm fueled by data in which gender, racial, class, and ableist biases are pervasive can effectively reinforce these biases without ever explicitly identifying them in the code.

Without transparency concerning either the data or the AI algorithms that interpret it, the public may be left in the dark as to how decisions that materially impact their lives are being made. Lacking adequate information to bring a legal claim, people can lose access to both due process and redress when they feel they have been improperly or erroneously judged by AI systems. Large gaps in case law make applying Title VII—the primary existing legal framework in the US for employment discrimination—to cases of algorithmic discrimination incredibly difficult. These concerns are exacerbated by algorithms that go beyond traditional considerations such as a person’s credit score to instead consider any and all variables correlated to the likelihood that they are a safe investment. A statistically significant correlation has been shown among Europeans between loan risk and whether a person uses a Mac or PC and whether they include their name in their email address—which turn out to be proxies for affluence.[14] Companies that use such attributes, even if they do indeed provide improvements in model accuracy, may be breaking the law when these attributes also clearly correlate with a protected class like race. Loss of autonomy can also result from AI-created “information bubbles” that narrowly constrict each individual’s online experience to the point that they are unaware that valid alternative perspectives even exist.

AI systems are being used in the service of disinformation on the internet, giving them the potential to become a threat to democracy and a tool for fascism. From deepfake videos to online bots manipulating public discourse by feigning consensus and spreading fake news,[15] there is the danger of AI systems undermining social trust. The technology can be co-opted by criminals, rogue states, ideological extremists, or simply special interest groups, to manipulate people for economic gain or political advantage. Disinformation poses serious threats to society, as it effectively changes and manipulates evidence to create social feedback loops that undermine any sense of objective truth. The debates about what is real quickly evolve into debates about who gets to decide what is real, resulting in renegotiations of power structures that often serve entrenched interests.[16]

While personalized medicine is a good potential application of AI, there are dangers. Current business models for AI-based health applications tend to focus on building a single system—for example, a deterioration predictor—that can be sold to many buyers. However, these systems often do not generalize beyond their training data. Even differences in how clinical tests are ordered can throw off predictors, and, over time, a system’s accuracy will often degrade as practices change. Clinicians and administrators are not well-equipped to monitor and manage these issues, and insufficient thought given to the human factors of AI integration has led to oscillation between mistrust of the system (ignoring it) and over-reliance on the system (trusting it even when it is wrong), a central concern of the 2016 AI100 report.

These concerns are troubling in general in the high-risk setting that is healthcare, and even more so because marginalized populations—those that already face discrimination from the health system from both structural factors (like lack of access) and scientific factors (like guidelines that were developed from trials on other populations)—may lose even more. Today and in the near future, AI systems built on machine learning are used to determine post-operative personalized pain management plans for some patients and in others to predict the likelihood that an individual will develop breast cancer. AI algorithms are playing a role in decisions concerning distributing organs, vaccines, and other elements of healthcare. Biases in these approaches can have literal life-and-death stakes.

In 2019, the story broke that an algorithm sold by the health-services company Optum, used to determine which patients may benefit from extra medical care, exhibited fundamental racial biases. The system designers ensured that race was excluded from consideration, but they also asked the algorithm to consider the future cost of a patient to the healthcare system.[17] While intended to capture a sense of medical severity, this feature in fact served as a proxy for race: controlling for medical needs, care for Black patients averages $1,800 less per year.
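The proxy effect can be sketched with synthetic numbers. The example below is illustrative only: the patients, the cost of $3,000 per chronic condition, and the $12,000 enrollment threshold are invented, and only the $1,800 spending gap comes from the text. The enrollment rule never reads the `group` field, yet it still treats the two groups differently.

```python
from dataclasses import dataclass

COST_PER_CONDITION = 3000.0  # assumed dollars per chronic condition (hypothetical)
SPENDING_GAP = 1800.0        # yearly gap at equal medical need, as quoted in the text


@dataclass
class Patient:
    group: str          # kept for auditing only; the enrollment rule never reads it
    need: int           # hypothetical number of chronic conditions
    annual_cost: float  # predicted yearly spending in dollars


def make_patients() -> list[Patient]:
    """Build one patient per group at each need level from 1 to 6."""
    patients = []
    for need in range(1, 7):
        base = need * COST_PER_CONDITION
        patients.append(Patient("white", need, base))
        patients.append(Patient("Black", need, base - SPENDING_GAP))
    return patients


def enroll_by_cost(patients: list[Patient], cost_threshold: float) -> list[Patient]:
    """Enroll everyone whose predicted cost meets the threshold (race-blind rule)."""
    return [p for p in patients if p.annual_cost >= cost_threshold]


def min_need_by_group(enrolled: list[Patient]) -> dict[str, int]:
    """Audit step: the lowest need level that was enough to be enrolled, per group."""
    result: dict[str, int] = {}
    for p in enrolled:
        result[p.group] = min(p.need, result.get(p.group, p.need))
    return result


if __name__ == "__main__":
    enrolled = enroll_by_cost(make_patients(), cost_threshold=12000.0)
    print(min_need_by_group(enrolled))  # {'white': 4, 'Black': 5}
```

With these made-up numbers, a white patient qualifies for extra care with four chronic conditions while a Black patient needs five, even though race is never an input to the rule.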

New technologies are being developed every day to treat serious medical issues. A new algorithm trained to identify melanomas was shown to be more accurate than doctors in a recent study, but the potential for the algorithm to be biased against Black patients is significant as the algorithm was trained using majority light-skinned groups.[18] The stakes are especially high for melanoma diagnoses, where the five-year survival rate is 17 percentage points lower for Black Americans than for white Americans. While technology has the potential to generate quicker diagnoses and thus close this survival gap, a machine-learning algorithm is only as good as its data set. An improperly trained algorithm could do more harm than good for patients at risk, missing cancers altogether or generating false positives. As new algorithms saturate the market with promises of medical miracles, losing sight of the biases ingrained in their outcomes could contribute to a loss of human biodiversity, as individuals who are left out of initial data sets are denied adequate care. While the exact long-term effects of algorithms in healthcare are unknown, their potential for bias replication means any advancement they produce for the population in aggregate—from diagnosis to resource distribution—may come at the expense of the most vulnerable.

[1] Brian Christian, The Alignment Problem: Machine Learning and Human Values, W. W. Norton & Company, 2020

[2] https://humancompatible.ai/app/uploads/2020/11/CHAI-2020-Progress-Report-public-9-30.pdf

[3] https://knightfoundation.org/philanthropys-techno-solutionism-problem/

[4] https://www.theguardian.com/world/2021/jan/12/french-woman-spends-three-years-trying-to-prove-she-is-not-dead ; https://virginia-eubanks.com/ (“Automating inequality”)

[5] https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

[6] Safiya Umoja Noble, Algorithms of Oppression: How Search Engines Reinforce Racism, NYU Press, 2018

[7] Ruha Benjamin, Race After Technology: Abolitionist Tools for the New Jim Code, Polity, 2019

[8] https://www.publicbooks.org/the-folly-of-technological-solutionism-an-interview-with-evgeny-morozov/

[9] https://predpol.com/about

[10] Kristian Lum and William Isaac, “To predict and serve?” Significance, October 2016, https://rss.onlinelibrary.wiley.com/doi/epdf/10.1111/j.1740-9713.2016.00960.x

[11] Jessica M. Eaglin, “Technologically Distorted Conceptions of Punishment,” https://www.repository.law.indiana.edu/cgi/viewcontent.cgi?article=3862&context=facpub

[12] Riccardo Fogliato, Maria De-Arteaga, and Alexandra Chouldechova, “Lessons from the Deployment of an Algorithmic Tool in Child Welfare,” https://fair-ai.owlstown.net/publications/1422

[13] https://qz.com/1427621/companies-are-on-the-hook-if-their-hiring-algorithms-are-biased/

[14] https://www.fdic.gov/analysis/cfr/2018/wp2018/cfr-wp2018-04.pdf

[15] Ben Buchanan, Andrew Lohn, Micah Musser, and Katerina Sedova, “Truth, Lies, and Automation,” https://cset.georgetown.edu/publication/truth-lies-and-automation/

[16] Britt Paris and Joan Donovan, “Deepfakes and Cheap Fakes: The Manipulation of Audio and Visual Evidence,” https://datasociety.net/library/deepfakes-and-cheap-fakes/

[17] https://www.washingtonpost.com/health/2019/10/24/racial-bias-medical-algorithm-favors-white-patients-over-sicker-black-patients/

[18] https://www.theatlantic.com/health/archive/2018/08/machine-learning-dermatology-skin-color/567619/

Cite This Report

Michael L. Littman, Ifeoma Ajunwa, Guy Berger, Craig Boutilier, Morgan Currie, Finale Doshi-Velez, Gillian Hadfield, Michael C. Horowitz, Charles Isbell, Hiroaki Kitano, Karen Levy, Terah Lyons, Melanie Mitchell, Julie Shah, Steven Sloman, Shannon Vallor, and Toby Walsh. "Gathering Strength, Gathering Storms: The One Hundred Year Study on Artificial Intelligence (AI100) 2021 Study Panel Report." Stanford University, Stanford, CA, September 2021. Doc: http://ai100.stanford.edu/2021-report. Accessed: September 16, 2021.


© 2021 by Stanford University. Gathering Strength, Gathering Storms: The One Hundred Year Study on Artificial Intelligence (AI100) 2021 Study Panel Report is made available under a Creative Commons Attribution-NoDerivatives 4.0 License (International): https://creativecommons.org/licenses/by-nd/4.0/

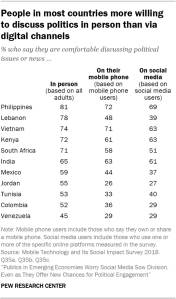


Artificial Intelligence and the Future of Humans

Experts say the rise of artificial intelligence will make most people better off over the next decade, but many have concerns about how advances in AI will affect what it means to be human, to be productive and to exercise free will


Digital life is augmenting human capacities and disrupting eons-old human activities. Code-driven systems have spread to more than half of the world’s inhabitants in ambient information and connectivity, offering previously unimagined opportunities and unprecedented threats. As emerging algorithm-driven artificial intelligence (AI) continues to spread, will people be better off than they are today?

Some 979 technology pioneers, innovators, developers, business and policy leaders, researchers and activists answered this question in a canvassing of experts conducted in the summer of 2018.

The experts predicted networked artificial intelligence will amplify human effectiveness but also threaten human autonomy, agency and capabilities. They spoke of the wide-ranging possibilities: that computers might match or even exceed human intelligence and capabilities on tasks such as complex decision-making, reasoning and learning, sophisticated analytics and pattern recognition, visual acuity, speech recognition and language translation. They said “smart” systems in communities, in vehicles, in buildings and utilities, on farms and in business processes will save time, money and lives and offer opportunities for individuals to enjoy a more-customized future.

Many focused their optimistic remarks on health care and the many possible applications of AI in diagnosing and treating patients or helping senior citizens live fuller and healthier lives. They were also enthusiastic about AI’s role in contributing to broad public-health programs built around massive amounts of data that may be captured in the coming years about everything from personal genomes to nutrition. Additionally, a number of these experts predicted that AI would abet long-anticipated changes in formal and informal education systems.

Yet, most experts, regardless of whether they are optimistic or not, expressed concerns about the long-term impact of these new tools on the essential elements of being human. All respondents in this non-scientific canvassing were asked to elaborate on why they felt AI would leave people better off or not. Many shared deep worries, and many also suggested pathways toward solutions. The main themes they sounded about threats and remedies are outlined in the accompanying table.


Specifically, participants were asked to consider the following:

“Please think forward to the year 2030. Analysts expect that people will become even more dependent on networked artificial intelligence (AI) in complex digital systems. Some say we will continue on the historic arc of augmenting our lives with mostly positive results as we widely implement these networked tools. Some say our increasing dependence on these AI and related systems is likely to lead to widespread difficulties.

Our question: By 2030, do you think it is most likely that advancing AI and related technology systems will enhance human capacities and empower them? That is, most of the time, will most people be better off than they are today? Or is it most likely that advancing AI and related technology systems will lessen human autonomy and agency to such an extent that most people will not be better off than the way things are today?”

Overall, and despite the downsides they fear, 63% of respondents in this canvassing said they are hopeful that most individuals will be mostly better off in 2030, and 37% said people will not be better off.

A number of the thought leaders who participated in this canvassing said humans’ expanding reliance on technological systems will only go well if close attention is paid to how these tools, platforms and networks are engineered, distributed and updated. Some of the powerful, overarching answers included those from:

Sonia Katyal, co-director of the Berkeley Center for Law and Technology and a member of the inaugural U.S. Commerce Department Digital Economy Board of Advisors, predicted, “In 2030, the greatest set of questions will involve how perceptions of AI and their application will influence the trajectory of civil rights in the future. Questions about privacy, speech, the right of assembly and technological construction of personhood will all re-emerge in this new AI context, throwing into question our deepest-held beliefs about equality and opportunity for all. Who will benefit and who will be disadvantaged in this new world depends on how broadly we analyze these questions today, for the future.”

We need to work aggressively to make sure technology matches our values. Erik Brynjolfsson


Bryan Johnson, founder and CEO of Kernel, a leading developer of advanced neural interfaces, and OS Fund, a venture capital firm, said, “I strongly believe the answer depends on whether we can shift our economic systems toward prioritizing radical human improvement and staunching the trend toward human irrelevance in the face of AI. I don’t mean just jobs; I mean true, existential irrelevance, which is the end result of not prioritizing human well-being and cognition.”

Andrew McLaughlin, executive director of the Center for Innovative Thinking at Yale University, previously deputy chief technology officer of the United States for President Barack Obama and global public policy lead for Google, wrote, “2030 is not far in the future. My sense is that innovations like the internet and networked AI have massive short-term benefits, along with long-term negatives that can take decades to be recognizable. AI will drive a vast range of efficiency optimizations but also enable hidden discrimination and arbitrary penalization of individuals in areas like insurance, job seeking and performance assessment.”

Michael M. Roberts, first president and CEO of the Internet Corporation for Assigned Names and Numbers (ICANN) and Internet Hall of Fame member, wrote, “The range of opportunities for intelligent agents to augment human intelligence is still virtually unlimited. The major issue is that the more convenient an agent is, the more it needs to know about you – preferences, timing, capacities, etc. – which creates a tradeoff of more help requires more intrusion. This is not a black-and-white issue – the shades of gray and associated remedies will be argued endlessly. The record to date is that convenience overwhelms privacy. I suspect that will continue.”

danah boyd, a principal researcher for Microsoft and founder and president of the Data & Society Research Institute, said, “AI is a tool that will be used by humans for all sorts of purposes, including in the pursuit of power. There will be abuses of power that involve AI, just as there will be advances in science and humanitarian efforts that also involve AI. Unfortunately, there are certain trend lines that are likely to create massive instability. Take, for example, climate change and climate migration. This will further destabilize Europe and the U.S., and I expect that, in panic, we will see AI be used in harmful ways in light of other geopolitical crises.”

Amy Webb, founder of the Future Today Institute and professor of strategic foresight at New York University, commented, “The social safety net structures currently in place in the U.S. and in many other countries around the world weren’t designed for our transition to AI. The transition through AI will last the next 50 years or more. As we move farther into this third era of computing, and as every single industry becomes more deeply entrenched with AI systems, we will need new hybrid-skilled knowledge workers who can operate in jobs that have never needed to exist before. We’ll need farmers who know how to work with big data sets. Oncologists trained as roboticists. Biologists trained as electrical engineers. We won’t need to prepare our workforce just once, with a few changes to the curriculum. As AI matures, we will need a responsive workforce, capable of adapting to new processes, systems and tools every few years. The need for these fields will arise faster than our labor departments, schools and universities are acknowledging. It’s easy to look back on history through the lens of the present – and to overlook the social unrest caused by widespread technological unemployment. We need to address a difficult truth that few are willing to utter aloud: AI will eventually cause a large number of people to be permanently out of work. Just as generations before witnessed sweeping changes during and in the aftermath of the Industrial Revolution, the rapid pace of technology will likely mean that Baby Boomers and the oldest members of Gen X – especially those whose jobs can be replicated by robots – won’t be able to retrain for other kinds of work without a significant investment of time and effort.”

Barry Chudakov, founder and principal of Sertain Research, commented, “By 2030 the human-machine/AI collaboration will be a necessary tool to manage and counter the effects of multiple simultaneous accelerations: broad technology advancement, globalization, climate change and attendant global migrations. In the past, human societies managed change through gut and intuition, but as Eric Teller, CEO of Google X, has said, ‘Our societal structures are failing to keep pace with the rate of change.’ To keep pace with that change and to manage a growing list of ‘wicked problems’ by 2030, AI – or using Joi Ito’s phrase, extended intelligence – will value and revalue virtually every area of human behavior and interaction. AI and advancing technologies will change our response framework and time frames (which in turn, changes our sense of time). Where once social interaction happened in places – work, school, church, family environments – social interactions will increasingly happen in continuous, simultaneous time. If we are fortunate, we will follow the 23 Asilomar AI Principles outlined by the Future of Life Institute and will work toward ‘not undirected intelligence but beneficial intelligence.’ Akin to nuclear deterrence stemming from mutually assured destruction, AI and related technology systems constitute a force for a moral renaissance. We must embrace that moral renaissance, or we will face moral conundrums that could bring about human demise. … My greatest hope for human-machine/AI collaboration constitutes a moral and ethical renaissance – we adopt a moonshot mentality and lock arms to prepare for the accelerations coming at us. My greatest fear is that we adopt the logic of our emerging technologies – instant response, isolation behind screens, endless comparison of self-worth, fake self-presentation – without thinking or responding smartly.”

John C. Havens, executive director of the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and the Council on Extended Intelligence, wrote, “Now, in 2018, a majority of people around the world can’t access their data, so any ‘human-AI augmentation’ discussions ignore the critical context of who actually controls people’s information and identity. Soon it will be extremely difficult to identify any autonomous or intelligent systems whose algorithms don’t interact with human data in one form or another.”

Batya Friedman, a human-computer interaction professor at the University of Washington’s Information School, wrote, “Our scientific and technological capacities have and will continue to far surpass our moral ones – that is our ability to use wisely and humanely the knowledge and tools that we develop. … Automated warfare – when autonomous weapons kill human beings without human engagement – can lead to a lack of responsibility for taking the enemy’s life or even knowledge that an enemy’s life has been taken. At stake is nothing less than what sort of society we want to live in and how we experience our humanity.”

Greg Shannon , chief scientist for the CERT Division at Carnegie Mellon University, said, “Better/worse will appear 4:1 with the long-term ratio 2:1. AI will do well for repetitive work where ‘close’ will be good enough and humans dislike the work. … Life will definitely be better as AI extends lifetimes, from health apps that intelligently ‘nudge’ us to health, to warnings about impending heart/stroke events, to automated health care for the underserved (remote) and those who need extended care (elder care). As to liberty, there are clear risks. AI affects agency by creating entities with meaningful intellectual capabilities for monitoring, enforcing and even punishing individuals. Those who know how to use it will have immense potential power over those who don’t/can’t. Future happiness is really unclear. Some will cede their agency to AI in games, work and community, much like the opioid crisis steals agency today. On the other hand, many will be freed from mundane, unengaging tasks/jobs. If elements of community happiness are part of AI objective functions, then AI could catalyze an explosion of happiness.”

Kostas Alexandridis, author of “Exploring Complex Dynamics in Multi-agent-based Intelligent Systems,” predicted, “Many of our day-to-day decisions will be automated with minimal intervention by the end-user. Autonomy and/or independence will be sacrificed and replaced by convenience. Newer generations of citizens will become more and more dependent on networked AI structures and processes. There are challenges that need to be addressed in terms of critical thinking and heterogeneity. Networked interdependence will, more likely than not, increase our vulnerability to cyberattacks. There is also a real likelihood that there will exist sharper divisions between digital ‘haves’ and ‘have-nots,’ as well as among technologically dependent digital infrastructures. Finally, there is the question of the new ‘commanding heights’ of the digital network infrastructure’s ownership and control.”

Oscar Gandy, emeritus professor of communication at the University of Pennsylvania, responded, “We already face an ungranted assumption when we are asked to imagine human-machine ‘collaboration.’ Interaction is a bit different, but still tainted by the grant of a form of identity – maybe even personhood – to machines that we will use to make our way through all sorts of opportunities and challenges. The problems we will face in the future are quite similar to the problems we currently face when we rely upon ‘others’ (including technological systems, devices and networks) to acquire things we value and avoid those other things (that we might, or might not be aware of).”

James Scofield O’Rourke, a professor of management at the University of Notre Dame, said, “Technology has, throughout recorded history, been a largely neutral concept. The question of its value has always been dependent on its application. For what purpose will AI and other technological advances be used? Everything from gunpowder to internal combustion engines to nuclear fission has been applied in both helpful and destructive ways. Assuming we can contain or control AI (and not the other way around), the answer to whether we’ll be better off depends entirely on us (or our progeny). ‘The fault, dear Brutus, is not in our stars, but in ourselves, that we are underlings.’”

Simon Biggs, a professor of interdisciplinary arts at the University of Edinburgh, said, “AI will function to augment human capabilities. The problem is not with AI but with humans. As a species we are aggressive, competitive and lazy. We are also empathic, community minded and (sometimes) self-sacrificing. We have many other attributes. These will all be amplified. Given historical precedent, one would have to assume it will be our worst qualities that are augmented. My expectation is that in 2030 AI will be in routine use to fight wars and kill people, far more effectively than we can currently kill. As societies we will be less affected by this than we currently are, as we will not be doing the fighting and killing ourselves. Our capacity to modify our behaviour, subject to empathy and an associated ethical framework, will be reduced by the disassociation between our agency and the act of killing. We cannot expect our AI systems to be ethical on our behalf – they won’t be, as they will be designed to kill efficiently, not thoughtfully. My other primary concern is to do with surveillance and control. The advent of China’s Social Credit System (SCS) is an indicator of what is likely to come. We will exist within an SCS as AI constructs hybrid instances of ourselves that may or may not resemble who we are. But our rights and affordances as individuals will be determined by the SCS. This is the Orwellian nightmare realised.”

Mark Surman, executive director of the Mozilla Foundation, responded, “AI will continue to concentrate power and wealth in the hands of a few big monopolies based in the U.S. and China. Most people – and parts of the world – will be worse off.”

William Uricchio , media scholar and professor of comparative media studies at MIT, commented, “AI and its related applications face three problems: development at the speed of Moore’s Law, development in the hands of a technological and economic elite, and development without benefit of an informed or engaged public. The public is reduced to a collective of consumers awaiting the next technology. Whose notion of ‘progress’ will prevail? We have ample evidence of AI being used to drive profits, regardless of implications for long-held values; to enhance governmental control and even score citizens’ ‘social credit’ without input from citizens themselves. Like technologies before it, AI is agnostic. Its deployment rests in the hands of society. But absent an AI-literate public, the decision of how best to deploy AI will fall to special interests. Will this mean equitable deployment, the amelioration of social injustice and AI in the public service? Because the answer to this question is social rather than technological, I’m pessimistic. The fix? We need to develop an AI-literate public, which means focused attention in the educational sector and in public-facing media. We need to assure diversity in the development of AI technologies. And until the public, its elected representatives and their legal and regulatory regimes can get up to speed with these fast-moving developments we need to exercise caution and oversight in AI’s development.”

The remainder of this report is divided into three sections that draw from hundreds of additional respondents’ hopeful and critical observations: 1) concerns about human-AI evolution, 2) suggested solutions to address AI’s impact, and 3) expectations of what life will be like in 2030, including respondents’ positive outlooks on the quality of life and the future of work, health care and education. Some responses are lightly edited for style.

ABOUT PEW RESEARCH CENTER Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping the world. It conducts public opinion polling, demographic research, media content analysis and other empirical social science research. Pew Research Center does not take policy positions. It is a subsidiary of The Pew Charitable Trusts .

Copyright 2024 Pew Research Center

Why We Should Think About the Threat of Artificial Intelligence

By Gary Marcus

If the New York Times’s latest article is to be believed, artificial intelligence is moving so fast it sometimes seems almost “magical.” Self-driving cars have arrived; Siri can listen to your voice and find the nearest movie theatre; and I.B.M. just set the “Jeopardy”-conquering Watson to work on medicine, initially training medical students, perhaps eventually helping in diagnosis. Scarcely a month goes by without the announcement of a new A.I. product or technique. Yet, some of the enthusiasm may be premature: as I’ve noted previously, we still haven’t produced machines with common sense, vision, natural language processing, or the ability to create other machines. Our efforts at directly simulating human brains remain primitive.

Still, at some level, the only real difference between enthusiasts and skeptics is a time frame. The futurist and inventor Ray Kurzweil thinks true, human-level A.I. will be here in less than two decades. My estimate is at least double that, especially given how little progress has been made in computing common sense; the challenges in building A.I., especially at the software level, are much harder than Kurzweil lets on.

But a century from now, nobody will much care about how long it took, only what happened next. It’s likely that machines will be smarter than us before the end of the century—not just at chess or trivia questions but at just about everything, from mathematics and engineering to science and medicine. There might be a few jobs left for entertainers, writers, and other creative types, but computers will eventually be able to program themselves, absorb vast quantities of new information, and reason in ways that we carbon-based units can only dimly imagine. And they will be able to do it every second of every day, without sleep or coffee breaks.

For some people, that future is a wonderful thing. Kurzweil has written about a rapturous singularity in which we merge with machines and upload our souls for immortality; Peter Diamandis has argued that advances in A.I. will be one key to ushering in a new era of “abundance,” with enough food, water, and consumer gadgets for all. Skeptics like Erik Brynjolfsson and I have worried about the consequences of A.I. and robotics for employment. But even if you put aside worries about what super-advanced A.I. might do to the labor market, there’s another concern, too: that powerful A.I. might threaten us more directly, by battling us for resources.

Most people see that sort of fear as silly science-fiction drivel—the stuff of “The Terminator” and “The Matrix.” To the extent that we plan for our medium-term future, we worry about asteroids, the decline of fossil fuels, and global warming, not robots. But a dark new book by James Barrat, “Our Final Invention: Artificial Intelligence and the End of the Human Era,” lays out a strong case for why we should be at least a little worried.

Barrat’s core argument, which he borrows from the A.I. researcher Steve Omohundro, is that the drive for self-preservation and resource acquisition may be inherent in all goal-driven systems of a certain degree of intelligence. In Omohundro’s words, “if it is smart enough, a robot that is designed to play chess might also want to build a spaceship,” in order to obtain more resources for whatever goals it might have. A purely rational artificial intelligence, Barrat writes, might expand “its idea of self-preservation … to include proactive attacks on future threats,” including, presumably, people who might be loath to surrender their resources to the machine. Barrat worries that “without meticulous, countervailing instructions, a self-aware, self-improving, goal-seeking system will go to lengths we’d deem ridiculous to fulfill its goals,” even, perhaps, commandeering all the world’s energy in order to maximize whatever calculation it happened to be interested in.

Of course, one could try to ban super-intelligent computers altogether. But “the competitive advantage—economic, military, even artistic—of every advance in automation is so compelling,” Vernor Vinge, the mathematician and science-fiction author, wrote, “that passing laws, or having customs, that forbid such things merely assures that someone else will.”

If machines will eventually overtake us, as virtually everyone in the A.I. field believes, the real question is about values: how we instill them in machines, and how we then negotiate with those machines if and when their values are likely to differ greatly from our own. As the Oxford philosopher Nick Bostrom argued:

We cannot blithely assume that a superintelligence will necessarily share any of the final values stereotypically associated with wisdom and intellectual development in humans—scientific curiosity, benevolent concern for others, spiritual enlightenment and contemplation, renunciation of material acquisitiveness, a taste for refined culture or for the simple pleasures in life, humility and selflessness, and so forth. It might be possible through deliberate effort to construct a superintelligence that values such things, or to build one that values human welfare, moral goodness, or any other complex purpose that its designers might want it to serve. But it is no less possible—and probably technically easier—to build a superintelligence that places final value on nothing but calculating the decimals of pi.

The British cyberneticist Kevin Warwick once asked, “How can you reason, how can you bargain, how can you understand how that machine is thinking when it’s thinking in dimensions you can’t conceive of?”

If there is a hole in Barrat’s dark argument, it is in his glib presumption that if a robot is smart enough to play chess, it might also “want to build a spaceship”—and that tendencies toward self-preservation and resource acquisition are inherent in any sufficiently complex, goal-driven system. For now, most of the machines that are good enough to play chess, like I.B.M.’s Deep Blue, haven’t shown the slightest interest in acquiring resources.

But before we get complacent and decide there is nothing to worry about after all, it is important to realize that the goals of machines could change as they get smarter. Once computers can effectively reprogram themselves, and successively improve themselves, leading to a so-called “technological singularity” or “intelligence explosion,” the risks of machines outwitting humans in battles for resources and self-preservation cannot simply be dismissed.

One of the most pointed quotes in Barrat’s book belongs to the legendary serial A.I. entrepreneur Danny Hillis, who likens the upcoming shift to one of the greatest transitions in the history of biological evolution: “We’re at that point analogous to when single-celled organisms were turning into multi-celled organisms. We are amoeba and we can’t figure out what the hell this thing is that we’re creating.”

Already, advances in A.I. have created risks that we never dreamt of. With the advent of the Internet age and its Big Data explosion, “large amounts of data is being collected about us and then being fed to algorithms to make predictions,” Vaibhav Garg, a computer-risk specialist at Drexel University, told me. “We do not have the ability to know when the data is being collected, ensure that the data collected is correct, update the information, or provide the necessary context.” Few people would have dreamt of this risk even twenty years ago. What risks lie ahead? Nobody really knows, but Barrat is right to ask.

Photograph by John Vink/Magnum.

Major new report explains the risks and rewards of artificial intelligence

AI has begun to permeate every aspect of our lives. Image: Unsplash/Hitesh Choudhary

By Toby Walsh and Liz Sonenberg

- A new report has just been released, highlighting the changes in AI over the last 5 years, and predicted future trends.

- It was co-written by people across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

- In the last 5 years, AI has become an increasing part of our lives, revolutionizing a number of industries, but is still not free from risk.

A major new report on the state of artificial intelligence (AI) has just been released. Think of it as the AI equivalent of an Intergovernmental Panel on Climate Change report, in that it identifies where AI is at today, and the promise and perils in view.

From language generation and molecular medicine to disinformation and algorithmic bias, AI has begun to permeate every aspect of our lives.

The report argues that we are at an inflection point where researchers and governments must think and act carefully to contain the risks AI presents and make the most of its benefits.

A century-long study of AI

The report comes out of the AI100 project, which aims to study and anticipate the effects of AI rippling out through our lives over the course of the next 100 years.

AI100 produces a new report every five years: the first was published in 2016, and this is the second. As two points define a line, this second report lets us see the direction AI is taking us in.

One of us (Liz Sonenberg) is a member of the standing committee overseeing the AI100 project, and the other (Toby Walsh) was on the study panel that wrote this particular report. Members of the panel came from across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

The promises and perils of AI are becoming real

The report highlights the remarkable progress made in AI over the past five years. AI is leaving the laboratory and has entered our lives, having a “real-world impact on people, institutions, and culture”. Read the news on any given day and you’re likely to find multiple stories about some new advance in AI or some new use of AI.

For example, in natural language processing (NLP), computers can now analyse and even generate realistic human language. To demonstrate, we asked OpenAI’s GPT-3 system, one of the largest neural networks ever built, to summarise the AI100 report for you. It did a pretty good job, even if the summary confronts our sense of self by being written in the first person:

In the coming decade, I expect that AI will play an increasingly prominent role in the lives of people everywhere. AI-infused services will become more common, and AI will become increasingly embedded in the daily lives of people across the world.

I believe that this will bring with it great economic and societal benefits, but that it will also require us to address the many challenges to ensure that the benefits are broadly shared and that people are not marginalised by these new technologies.

A key insight of AI research is that it is easier to build things than to understand why they work. However, defining what success looks like for an AI application is not straightforward.

For example, the AI systems that are used in healthcare to analyse symptoms, recommend diagnoses, or choose treatments are often far better than anything that could be built by a human, but their success is hard to quantify.

As a second example of the recent and remarkable progress in AI, consider the latest breakthrough from Google’s DeepMind. AlphaFold is an AI program that provides a huge step forward in our ability to predict how proteins fold.

This will likely lead to major advances in life sciences and medicine, accelerating efforts to understand the building blocks of life and enabling quicker and more sophisticated drug discovery. Most of the planet now knows to its cost how the unique shape of the spike proteins in the SARS-CoV-2 virus is key to its ability to invade our cells, and also to the vaccines developed to combat its deadly progress.

The AI100 report argues that worries about super-intelligent machines and wide-scale job loss from automation are still premature, requiring AI that is far more capable than available today. The main concern the report raises is not malevolent machines of superior intelligence to humans, but incompetent machines of inferior intelligence.

Once again, it’s easy to find in the news real-life stories of risks and threats to our democratic discourse and mental health posed by AI-powered tools. For instance, Facebook uses machine learning to sort its news feed and give each of its 2 billion users a unique but often inflammatory view of the world.

The time to act is now

It’s clear we’re at an inflection point: we need to think seriously and urgently about the downsides and risks the increasing application of AI is revealing. The ever-improving capabilities of AI are a double-edged sword. Harms may be intentional, like deepfake videos, or unintended, like algorithms that reinforce racial and other biases.

AI research has traditionally been undertaken by computer and cognitive scientists. But the challenges being raised by AI today are not just technical. All areas of human inquiry, and especially the social sciences, need to be included in a broad conversation about the future of the field. Minimising negative impacts on society and enhancing the positives requires consideration from across academia and with societal input.

Governments also have a crucial role to play in shaping the development and application of AI. Indeed, governments around the world have begun to consider and address the opportunities and challenges posed by AI. But they remain behind the curve.

A greater investment of time and resources is needed to meet the challenges posed by the rapidly evolving technologies of AI and associated fields. In addition to regulation, governments also need to educate. In an AI-enabled world, our citizens, from the youngest to the oldest, need to be literate in these new digital technologies.

At the end of the day, the success of AI research will be measured by how it has empowered all people, helping tackle the many wicked problems facing the planet, from the climate emergency to increasing inequality within and between countries.

AI will have failed if it harms or devalues the very people we are trying to help.

MIT Technology Review

The true dangers of AI are closer than we think

Forget superintelligent AI: algorithms are already creating real harm. The good news: the fight back has begun.

By Karen Hao

As long as humans have built machines, we’ve feared the day they could destroy us. Stephen Hawking famously warned that AI could spell an end to civilization. But to many AI researchers, these conversations feel unmoored. It’s not that they don’t fear AI running amok—it’s that they see it already happening, just not in the ways most people would expect.

AI is now screening job candidates, diagnosing disease, and identifying criminal suspects. But instead of making these decisions more efficient or fair, it’s often perpetuating the biases of the humans on whose decisions it was trained.

William Isaac is a senior research scientist on the ethics and society team at DeepMind, an AI startup that Google acquired in 2014. He also co-chairs the Fairness, Accountability, and Transparency conference—the premier annual gathering of AI experts, social scientists, and lawyers working in this area. I asked him about the current and potential challenges facing AI development—as well as the solutions.

Q: Should we be worried about superintelligent AI?

A: I want to shift the question. The threats overlap, whether it’s predictive policing and risk assessment in the near term, or more scaled and advanced systems in the longer term. Many of these issues also have a basis in history. So potential risks and ways to approach them are not as abstract as we think.

There are three areas that I want to flag. Probably the most pressing one is this question about value alignment: how do you actually design a system that can understand and implement the various forms of preferences and values of a population? In the past few years we’ve seen attempts by policymakers, industry, and others to try to embed values into technical systems at scale—in areas like predictive policing, risk assessments, hiring, etc. It’s clear that they exhibit some form of bias that reflects society. The ideal system would balance out all the needs of many stakeholders and many people in the population. But how does society reconcile their own history with aspiration? We’re still struggling with the answers, and that question is going to get exponentially more complicated. Getting that problem right is not just something for the future, but for the here and now.

The second one would be achieving demonstrable social benefit. Up to this point there are still few pieces of empirical evidence that validate that AI technologies will achieve the broad-based social benefit that we aspire to.

Lastly, I think the biggest one that anyone who works in the space is concerned about is: what are the robust mechanisms of oversight and accountability?

Q: How do we overcome these risks and challenges?

A: Three areas would go a long way. The first is to build a collective muscle for responsible innovation and oversight. Make sure you’re thinking about where the forms of misalignment or bias or harm exist. Make sure you develop good processes for how you ensure that all groups are engaged in the process of technological design. Groups that have been historically marginalized are often not the ones that get their needs met. So how we design processes to actually do that is important.

The second one is accelerating the development of the sociotechnical tools to actually do this work. We don’t have a whole lot of tools.

The last one is providing more funding and training for researchers and practitioners—particularly researchers and practitioners of color—to conduct this work. Not just in machine learning, but also in STS [science, technology, and society] and the social sciences. We want to not just have a few individuals but a community of researchers to really understand the range of potential harms that AI systems pose, and how to successfully mitigate them.

Q: How far have AI researchers come in thinking about these challenges, and how far do they still have to go?

A: In 2016, I remember, the White House had just come out with a big data report, and there was a strong sense of optimism that we could use data and machine learning to solve some intractable social problems. Simultaneously, there were researchers in the academic community who had been flagging in a very abstract sense: “Hey, there are some potential harms that could be done through these systems.” But they largely had not interacted at all. They existed in unique silos.

Since then, we’ve just had a lot more research targeting this intersection between known flaws within machine-learning systems and their application to society. And once people began to see that interplay, they realized: “Okay, this is not just a hypothetical risk. It is a real threat.” So if you view the field in phases, phase one was very much highlighting and surfacing that these concerns are real. The second phase now is beginning to grapple with broader systemic questions.

Q: So are you optimistic about achieving broad-based beneficial AI?

A: I am. The past few years have given me a lot of hope. Look at facial recognition as an example. There was the great work by Joy Buolamwini, Timnit Gebru, and Deb Raji in surfacing intersectional disparities in accuracies across facial recognition systems [i.e., showing these systems were far less accurate on Black female faces than white male ones]. There’s the advocacy that happened in civil society to mount a rigorous defense of human rights against misapplication of facial recognition. And also the great work that policymakers, regulators, and community groups from the grassroots up were doing to communicate exactly what facial recognition systems were and what potential risks they posed, and to demand clarity on what the benefits to society would be. That’s a model of how we could imagine engaging with other advances in AI.

But the challenge with facial recognition is we had to adjudicate these ethical and values questions while we were publicly deploying the technology. In the future, I hope that some of these conversations happen before the potential harms emerge.

Q: What do you dream about when you dream about the future of AI?

A: It could be a great equalizer. Like if you had AI teachers or tutors that could be available to students and communities where access to education and resources is very limited, that’d be very empowering. And that’s a nontrivial thing to want from this technology. How do you know it’s empowering? How do you know it’s socially beneficial?

I went to graduate school in Michigan during the Flint water crisis. When the initial incidences of lead pipes emerged, the records they had for where the piping systems were located were on index cards at the bottom of an administrative building. The lack of access to technologies had put them at a significant disadvantage. It means the people who grew up in those communities, over 50% of whom are African-American, grew up in an environment where they don’t get basic services and resources.

May 17, 2024

UC Berkeley's only nonpartisan political magazine

Artificial Intelligence and the Loss of Humanity

The term “artificial intelligence,” or AI, has become a buzzword in recent years. Optimists see AI as a panacea for society’s most fundamental problems, from crime to corruption to inequality, while pessimists fear that AI will overtake human intelligence and crown itself king of the world. Underlying these two seemingly antithetical views is the assumption that AI is better and smarter than humanity and will ultimately replace humanity in making decisions.

It is easy to buy into the hype of omnipotent artificial intelligence these days, as venture capitalists dump billions of dollars into tech start-ups and government technocrats boast of how AI helps them streamline municipal governance. But the hype is just hype: AI is simply not as smart as we think. The true threat of AI to humanity lies not in the power of AI itself but in the ways people are already beginning to use it to chip away at our humanity.

AI outperforms humans, but only in low-level tasks.

Artificial intelligence is a field in computer science that seeks to have computers perform certain tasks by simulating human intelligence. Although the founding fathers of AI in the 1950s and 1960s experimented with manually codifying knowledge into computer systems, most of today’s AI applications rely on a statistical approach, machine learning, thanks to the proliferation of big data and computational power in recent years. However, today’s AI is still limited to the performance of specialized tasks, such as classifying images, recognizing patterns and generating sentences.

Although a specialized AI might outperform humans in its specific function, it does not understand the logic and principles of its actions. An AI that classifies images, for example, might label images of cats and dogs more accurately than a human, but it never knows how a cat is similar to and different from a dog. Similarly, a natural language processing (NLP) AI can train a model that projects English words onto vectors, but it does not comprehend the etymology and context of each individual word. AI performs tasks mechanically without understanding the content of the tasks, which means that it is certainly not able to outsmart its human masters in a dystopian manner and will not reach such a level for a long time, if ever.

AI does not dehumanize humans — humans do.

AI does not understand humanity, but the epistemological wall between AI and humanity is further complicated by the fact that humans do not understand AI, either. A typical AI model easily contains hundreds of thousands of parameters, whose weights are fine-tuned according to some mathematical principles in order to minimize “loss,” a rough estimate of how wrong the model is. The design of the loss function and its minimization process are often more art than science. We do not know what the weights in the model mean or how the model predicts one result rather than another. Without an explainable framework, decision-making driven by AI is a black box, unaccountable and even inhumane.
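To make the idea of minimizing “loss” concrete, here is a minimal sketch in Python, assuming a toy model with just two weights (a slope `w` and an intercept `b`) and mean squared error as the loss. The data, the function names and the learning rate are illustrative assumptions, not anything drawn from a real system. Real models apply the same kind of loop to vastly more weights, which is part of why the individual weights are so hard to interpret.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b: a rough measure of how wrong it is."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys, lr=0.05, steps=2000):
    """Plain gradient descent: repeatedly nudge w and b in the direction that lowers the loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

initial_loss = mse_loss(0.0, 0.0, xs, ys)
w, b = fit(xs, ys)
final_loss = mse_loss(w, b, xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss {initial_loss:.2f} -> {final_loss:.6f}")
```

With two weights, the fitted values can be read directly as a slope and an intercept. With hundreds of thousands of weights, no such reading is available, even though the procedure is the same.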

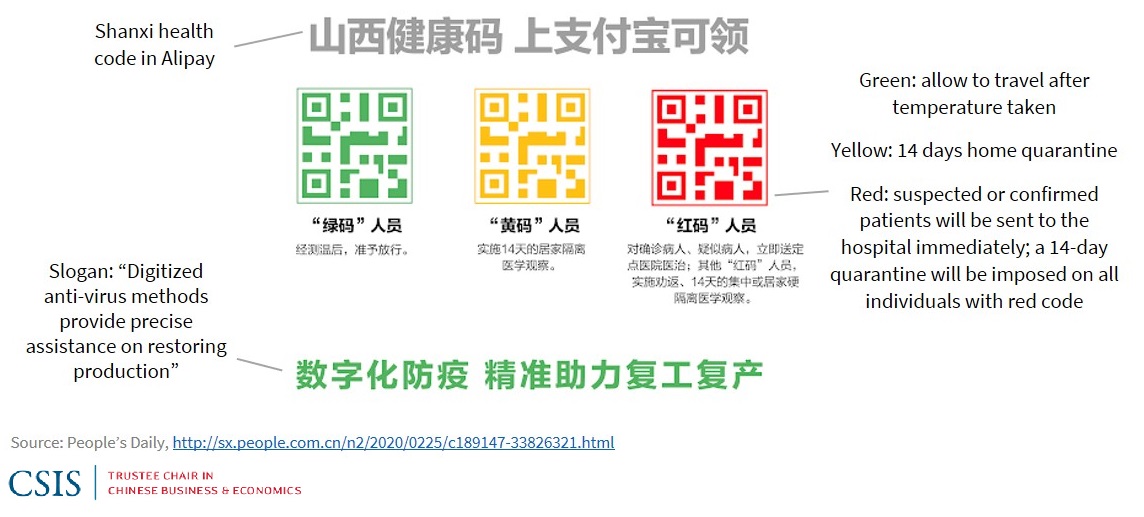

This is more than just a theoretical concern. This year in China, local authorities rolled out the so-called “health code,” a QR code assigned to each individual by an AI-powered risk assessment algorithm to indicate their risk of contracting and spreading COVID-19. Numerous news reports have described citizens who found their health codes suddenly turning from green (low-risk) to red (high-risk) for no apparent reason. They became “digital refugees” as they were immediately banned from entering public venues, including grocery stores, which require green codes. Nobody knows how the risk assessment algorithm works under the hood, yet, in this trying time of coronavirus, it is determining people’s day-to-day lives.

AI applications can intervene in human agency.

Artificial intelligence is also transforming the medical industry. Predictive algorithms are now powering brain-computer interfaces (BCIs) that can read signals from the brain and even write in signals if necessary. For example, a BCI can identify a seizure and act to suppress the symptom, a potentially life-saving application of AI. But BCIs also create problems concerning agency. Who is controlling one’s brain — the user or the machine?

One need not plug one’s brain into an electronic device to face this issue of agency. Our social media newsfeeds constantly use artificial intelligence to push us content based on patterns in our views, likes, mouse movements and the number of seconds we spend scrolling through a page. We are passive consumers in a deluge of information tailored to our tastes, no longer having to actively seek out information, because that information finds us.

AI knows nothing about culture and values.

Feeding an AI system requires data, the representation of information. Some information, such as gender, age and temperature, can be easily coded and quantified. However, there is no way to uniformly quantify complex emotions, beliefs, cultures, norms and values. Because AI systems cannot process these concepts, the best they can do is to seek to maximize benefits and minimize losses for people according to mathematical principles. This utilitarian logic, though, often contravenes what we would consider noble from a moral standpoint — prioritizing the weak over the strong, safeguarding the rights of the minority despite giving up greater overall welfare and seeking truth and justice rather than telling lies.

The fact that AI does not understand culture or values does not imply that AI is value-neutral. Rather, any AI designed by humans is implicitly value-laden. It is consciously or unconsciously imbued with the belief system of its designer. Biases in AI can come from the representativeness of the historical data, the ways in which data scientists clean and interpret the data, which categorizing buckets the model is designed to output, the choice of loss function and other design features. A more aggressive company culture, for example, might favor maximizing recall, the proportion of actual positives that the model identifies as positive, while a more prudent culture would encourage maximizing precision, the proportion of labelled positives that are actually positive. While such a distinction might seem trivial, in a medical setting, it can become an issue of life and death: do we try to distribute as much of a treatment as possible despite its side effects, or do we act more prudently to limit the distribution of the treatment to minimize side effects, even if many people will never get the treatment? Within a single AI model, these two goals usually cannot both be maximized, because loosening the decision threshold to catch more true positives (raising recall) typically lets in more false positives (lowering precision), and vice versa. People have to make a choice when designing an AI system, and the choice they make will inevitably reflect the values of the designers.
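The tension between recall and precision can be seen in a small sketch. The Python below assumes a hypothetical model that outputs a risk score between 0 and 1 for each of eight patients. The scores, the labels and the function name `precision_recall` are invented for illustration. The only thing that changes between the three runs is the decision threshold, which is exactly the kind of design choice the designers must make.

```python
def precision_recall(scores, labels, threshold):
    """Compute precision and recall when every score >= threshold is labelled positive."""
    predicted = [s >= threshold for s in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p and y == 1)      # true positives
    fp = sum(1 for p, y in zip(predicted, labels) if p and y == 0)      # false positives
    fn = sum(1 for p, y in zip(predicted, labels) if not p and y == 1)  # missed positives
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical model scores and true labels (1 = actually positive).
scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

cautious = precision_recall(scores, labels, threshold=0.80)    # few, confident positives
balanced = precision_recall(scores, labels, threshold=0.50)
aggressive = precision_recall(scores, labels, threshold=0.25)  # many positives, more mistakes

for name, (p, r) in [("cautious", cautious), ("balanced", balanced), ("aggressive", aggressive)]:
    print(f"{name:10s} precision={p:.2f} recall={r:.2f}")
```

On this toy data, the cautious threshold never labels a healthy patient positive but misses half of the true cases, while the aggressive threshold catches every true case at the cost of several false alarms. Neither setting is correct in the abstract; which one is preferable depends on what the designers value.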

Take responsibility, now.